Building AI microservices with LangChain: flexibility to plug in LLMs on the fly

Since the debut of ChatGPT, there has been a surge in enthusiasm to craft AI-driven applications or extend existing ones with AI capabilities. From chatbots and virtual assistants to documentation parsers and content generators, AI has reshaped the landscape of many industries. This momentum has not only accelerated advancements in Large Language Models (LLMs), e.g. GPT-4, Bard, or LLaMA, but also spurred the evolution of AI frameworks tailored for seamless integration of these LLMs.

LangChain is a great example of such a framework. It is open source, and we’ve used it extensively with several clients. It provides high-level abstractions that shield developers from the low-level details of the underlying LLMs, which is crucial for building flexible, vendor-agnostic AI applications. Consequently, swapping out OpenAI’s GPT-4 for Google’s Bard requires minimal changes and development effort. LangChain also supports output validation and prompt templates out of the box, applying prompt engineering best practices under the hood so that we can create robust prompts that yield more consistent, predictable outputs.
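
To make the idea concrete, here is a minimal plain-Python sketch of the three ingredients mentioned above: a vendor-neutral model interface, a prompt template, and output validation. It deliberately does not use LangChain's actual API. `ChatModel`, `FakeVendorA`, `FakeVendorB`, and `classify` are hypothetical names invented for illustration, and the two "vendors" return canned replies instead of calling a real LLM.

```python
import json
from string import Template
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface that every LLM backend must satisfy."""

    def complete(self, prompt: str) -> str: ...


class FakeVendorA:
    """Stand-in for one vendor's client; a real one would call the vendor's API."""

    def complete(self, prompt: str) -> str:
        return '{"sentiment": "positive"}'


class FakeVendorB:
    """Stand-in for a second vendor; note the differently formatted reply."""

    def complete(self, prompt: str) -> str:
        return '{ "sentiment" : "negative" }'


# Prompt template: the wording lives in one place, independent of the model.
SENTIMENT_PROMPT = Template(
    'Classify the sentiment of the review below as "positive" or "negative".\n'
    'Answer with JSON only, e.g. {"sentiment": "positive"}.\n\n'
    "Review: $review"
)

ALLOWED = {"positive", "negative"}


def classify(model: ChatModel, review: str) -> str:
    """Render the prompt, call whichever model was passed in, validate the reply."""
    raw = model.complete(SENTIMENT_PROMPT.substitute(review=review))
    try:
        sentiment = json.loads(raw)["sentiment"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        raise ValueError(f"unparseable model output: {raw!r}") from exc
    if not isinstance(sentiment, str) or sentiment not in ALLOWED:
        raise ValueError(f"unexpected sentiment: {sentiment!r}")
    return sentiment
```

Because `classify` depends only on the interface, switching vendors comes down to passing a different object, e.g. `classify(FakeVendorB(), "Arrived broken")` instead of `classify(FakeVendorA(), ...)`. The prompt and the validation logic stay untouched.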

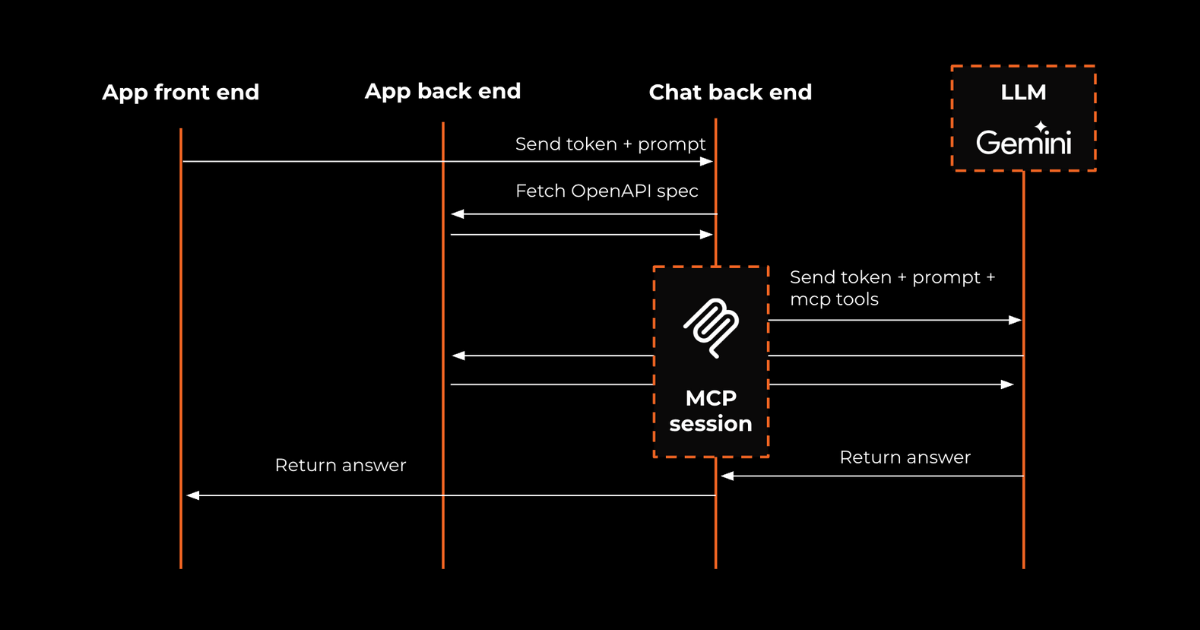

By combining LangChain with serverless computing, we’ve had huge success in building AI microservices that scale and integrate with any existing backend codebase, regardless of programming language. Since LangChain requires Python or TypeScript, we decouple it from the rest of the backend (see Figure 1) by encapsulating it in a serverless function (AWS Lambda, Google Cloud Functions, …) with a compatible runtime. As such, it integrates seamlessly with the rest of the architecture and can be called either synchronously, by exposing a REST endpoint, or asynchronously, through message queues or pub/sub messaging, depending on the requirements of the use case. As cloud service providers continue to innovate in the AI space, we expect to see a wide offering of managed cloud-native AI services that could further reduce overall development and infrastructure costs.
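
As a rough sketch of what such a function could look like on AWS Lambda, the handler below serves both invocation styles: an API Gateway proxy request (synchronous) and a batch of SQS messages (asynchronous). The choice of API Gateway and SQS, the `text` field in the payload, and the `run_chain` placeholder are assumptions made for illustration. In a real service, `run_chain` would invoke the LangChain logic.

```python
import json


def run_chain(text: str) -> dict:
    """Hypothetical placeholder for the LLM call (e.g. a LangChain chain)."""
    return {"summary": text[:50]}


def handler(event, context):
    """AWS Lambda entry point that serves both invocation styles."""
    # Asynchronous path: SQS delivers a batch of messages under "Records".
    if "Records" in event:
        results = [
            run_chain(json.loads(record["body"])["text"])
            for record in event["Records"]
        ]
        # A real service would persist or publish the results here.
        return {"processed": len(results)}

    # Synchronous path: API Gateway (proxy integration) passes the HTTP body
    # as a string and expects a dict with "statusCode" and "body" in return.
    try:
        payload = json.loads(event.get("body") or "{}")
        text = payload["text"]
    except (json.JSONDecodeError, KeyError, TypeError):
        error = {"error": "expected a JSON body with a 'text' field"}
        return {"statusCode": 400, "body": json.dumps(error)}
    return {"statusCode": 200, "body": json.dumps(run_chain(text))}
```

The rest of the backend only sees an HTTP endpoint or a queue, so it never needs to know that Python is running behind it.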

Looking ahead, we see a growing range of LLMs being designed for specific application domains, e.g. Med-PaLM 2 for healthcare. While that’s certainly no reason to hold off on building AI applications today, it’s ever more important to keep flexibility in mind to minimize the hurdles of switching LLMs down the road. Frameworks like LangChain are powerful tools that facilitate just that, but they cannot (yet?) choose the right LLM for the job: this requires periodically evaluating the best available technology against application-specific requirements.
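
One possible shape for such a periodic evaluation is a small harness that runs every candidate model over the same application-specific test cases and compares scores. The sketch below is an assumption about how this could be done, not a description of an existing tool: `accuracy` and `pick_best` are hypothetical helpers, exact-match accuracy is only one of many possible metrics, and a candidate is any callable that maps a prompt to an answer.

```python
from typing import Callable, Dict, List, Tuple

# A candidate is any callable from prompt to answer; real ones would wrap LLM calls.
Model = Callable[[str], str]
Case = Tuple[str, str]  # (prompt, expected answer in lowercase)


def accuracy(model: Model, cases: List[Case]) -> float:
    """Fraction of cases where the normalized answer matches exactly."""
    hits = sum(model(prompt).strip().lower() == expected for prompt, expected in cases)
    return hits / len(cases)


def pick_best(candidates: Dict[str, Model], cases: List[Case]) -> Tuple[str, float]:
    """Score every candidate on the same cases and return the top scorer."""
    scores = {name: accuracy(model, cases) for name, model in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]
```

Rerunning the same cases whenever a new model becomes available turns "which LLM should we use?" into a repeatable measurement rather than a one-off decision.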

Interested in hearing more about our approach? Don’t hesitate to reach out at hello@panenco.com.
